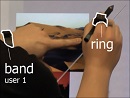

In this Vision (for the UIST 2021 Symposium on User Interface Software & Technology), I argue that “touch” input and interaction remains in its infancy when viewed in the context of the seen but unnoticed vocabulary of natural human behaviors, activities, and environments that surround direct interaction with displays.

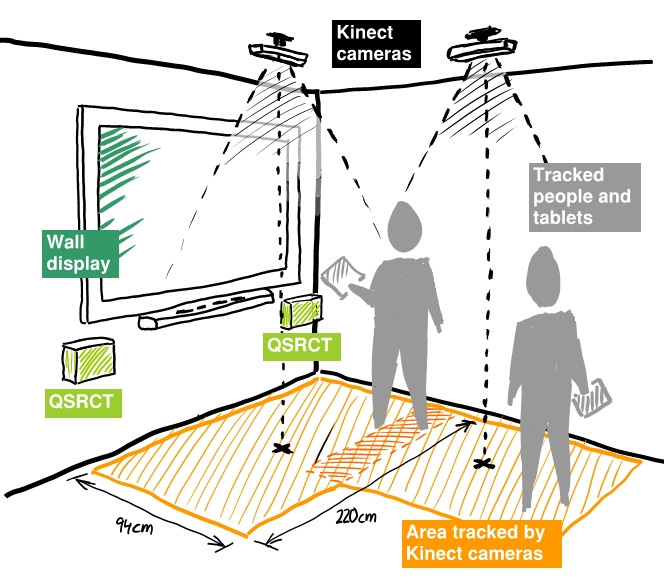

Unlike status-quo touch interaction — a shadowplay of fingers on a single screen — I argue that our perspective of direct interaction should encompass the full rich context of individual use (whether via touch, sensors, or in combination with other modalities), as well as collaborative activity where people are engaged in local (co-located), remote (tele-present), and hybrid work.

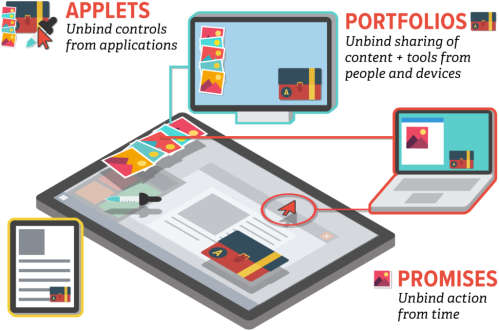

We can further view touch through the lens of the “Society of Devices,” where each person’s activities span many complementary, oft-distinct devices that offer the right task affordance (input modality, screen size, aspect ratio, or simply a distinct surface with dedicated purpose) at the right place and time.

While many hints of this vision already exist in the literature, I speculate that a comprehensive program of research to systematically inventory, sense, and design interactions around such human behaviors and activities—and that fully embrace touch as a multi-modal, multi-sensor, multi-user, and multi-device construct—could revolutionize both individual and collaborative interaction with technology.

For the remote presentation, instead of a normal academic talk, I recruited my friend and colleague Nicolai Marquardt to have a 15-minute conversation with me about the vision and some of its implications:

Watch the “Seen but Unnoticed” UIST 2021 Vision presentation video on YouTube

Several aspects of this vision paper relate to a larger Microsoft Research project known as SurfaceFleet that explores the distributed systems and user experience implications of a “Society of Devices” in the New Future of Work.

Ken Hinckley. The “Seen but Unnoticed” Vocabulary of Natural Touch: Revolutionizing Direct Interaction with Our Devices and One Another. UIST Vision presented at The 34th Annual ACM Symposium on User Interface Software and Technology (UIST ’21). Non-archival publication, 5 pages. Virtual Event, USA, Oct 10-14, 2021.

https://arxiv.org/abs/2310.03958

Frederik Brudy*, David Ledo*, Michel Pahud, Nathalie Henry Riche, Christian Holz, Anand Waghmare, Hemant Surale, Marcus Peinado, Xiaokuan Zhang, Shannon Joyner, Badrish Chandramouli, Umar Farooq Minhas, Jonathan Goldstein, Bill Buxton, and Ken Hinckley. SurfaceFleet: Exploring Distributed Interactions Unbounded from Device, Application, User, and Time. In Proceedings of the 33rd Annual ACM Symposium on User Interface Software and Technology (UIST ’20). ACM, New York, NY, USA. Virtual Event, USA, October 20-23, 2020, pp. 7-21.

Haijun Xia, Ken Hinckley, Michel Pahud, Xiao Tu, and Bill Buxton. 2017. WritLarge: Ink Unleashed by Unified Scope, Action, and Zoom. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (CHI’17). ACM, New York, NY, USA, pp. 3227-3240. Denver, Colorado, United States, May 6-11, 2017. Honorable Mention Award (top 5% of papers).

Yann Riche, Nathalie Henry Riche, Ken Hinckley, Sarah Fuelling, Sarah Williams, and Sheri Panabaker. 2017. As We May Ink? Learning from Everyday Analog Pen Use to Improve Digital Ink Experiences. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (CHI’17). ACM, New York, NY, USA, pp. 3241-3253. Denver, Colorado, United States, May 6-11, 2017.
