SurfaceFleet is a multi-year project at Microsoft Research contributing a system and toolkit that uses resilient and performant distributed programming techniques to explore cross-device user experiences.

With appropriate design, these technologies afford mobility of user activity unbounded by device, application, user, and time.

The vision of the project is to enable a future where an ecosystem of technologies seamlessly transition user activity from one place to another — whether that “place” takes the form of a literal location, a different device form-factor, the presence of a collaborator, or the availability of the information needed to complete a particular task.

The goal is a Society of Technologies that fosters meaningful relationships amongst its members, rather than centering on any particular device.

This engenders mobility of user activity in a way that takes advantage of recent advances in networking and storage, and that supports consumer trends toward multiple-device usage and distributed workflows. Not the least of these trends is the massive global shift toward remote work, which bridges multiple users, on multiple devices, across local and remote locations.

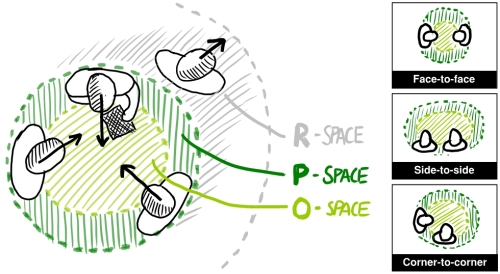

In this particular paper, published at UIST 2020, we explored the trend for knowledge work to increasingly span multiple computing surfaces.

Yet in status-quo user experiences, content, tools, behaviors, and workflows remain largely bound to the current device, running the current application, for the current user, at the current moment in time.

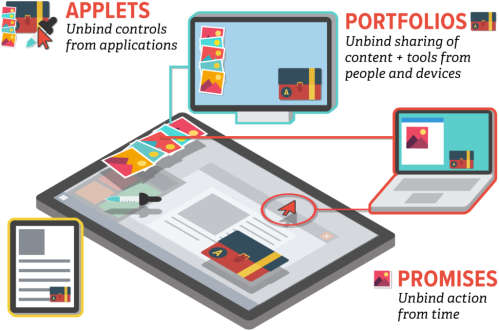

In this work, we first introduce SurfaceFleet as a system and toolkit founded on resilient distributed programming techniques. We then leverage this toolkit to explore a range of cross-device interactions that are unbounded in four dimensions: device, application, user, and time.

As a reference implementation, we describe an interface built using SurfaceFleet that employs lightweight, semi-transparent UI elements known as Applets.

Applets appear always-on-top of the operating system, application windows, and (conceptually) above the device itself. But all connections and synchronized data are virtualized and made resilient through the cloud.

For example, a sharing Applet known as a Portfolio allows a user to drag and drop unbound Interaction Promises into a document. Such promises can then be fulfilled with content asynchronously, at a later time (or multiple times), from another device, and by the same or a different user.
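The page does not describe the programming interface behind Interaction Promises. As a rough illustration only, the Python sketch below models a promise as a placeholder that starts unbound and accumulates fulfillments over time, each tagged with the device and user that supplied it. Every name here (`InteractionPromise`, `Fulfillment`, `Document`, `fulfill`) is hypothetical, and a plain in-process dictionary stands in for cloud-synchronized state.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional
import uuid


@dataclass
class Fulfillment:
    """One delivery of content into a promise (hypothetical structure)."""
    content: str
    device: str
    user: str
    at: datetime


@dataclass
class InteractionPromise:
    """Hypothetical model of an unbound placeholder dropped into a document.

    The promise starts empty and may be fulfilled later, more than once,
    from any device and by any user.
    """
    label: str
    promise_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    fulfillments: List[Fulfillment] = field(default_factory=list)

    def fulfill(self, content: str, device: str, user: str) -> None:
        # Record what was delivered, from which device, by whom, and when.
        self.fulfillments.append(
            Fulfillment(content, device, user, datetime.now(timezone.utc))
        )

    @property
    def is_fulfilled(self) -> bool:
        return bool(self.fulfillments)

    def latest(self) -> Optional[str]:
        # Most recent content, or None while the promise is still unbound.
        return self.fulfillments[-1].content if self.fulfillments else None


@dataclass
class Document:
    """A toy document whose body mixes plain text and promise placeholders."""
    parts: list = field(default_factory=list)

    def render(self) -> str:
        out = []
        for part in self.parts:
            if isinstance(part, InteractionPromise):
                out.append(part.latest() or f"[pending: {part.label}]")
            else:
                out.append(part)
        return " ".join(out)


# Assumption: a dict stands in for cloud-synchronized state shared by devices.
cloud = {}

# On a laptop, user A drops an unbound promise into a draft.
photo = InteractionPromise(label="site photo")
cloud[photo.promise_id] = photo
doc = Document(["Inspection report:", photo])
print(doc.render())  # Inspection report: [pending: site photo]

# Later, user B fulfills the same promise from a phone.
cloud[photo.promise_id].fulfill("photo_0412.jpg", device="phone", user="B")
print(doc.render())  # Inspection report: photo_0412.jpg
```

Keeping every fulfillment, rather than overwriting a single value, is one way to capture the "multiple times" case; whether SurfaceFleet records history like this is an assumption of the sketch.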

This work leans heavily into present computing trends suggesting that cross-device and distributed systems will have a major impact on HCI going forward:

With Moore’s Law at an end, yet networking and storage exhibiting exponential gains, the future appears to favor systems that emphasize seamless mobility of data rather than reliance on any particular CPU.

At the same time, the ubiquity of connected and inter-dependent devices, of many different form factors, hints at a Society of Technologies that establishes meaningful relationships amongst the members of this society.

This favors mobility of user activity, rather than reliance on any particular device, as the path toward a future where HCI can realize its full human potential.

Overall, SurfaceFleet advances this perspective through a concrete system implementation as well as our unifying conceptual contribution, which frames mobility as transitions in place in terms of device, application, user, and time, together with an exploration of techniques that simultaneously bridge all four of these gaps.
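To make the four-dimensional framing concrete, here is a small Python sketch, assuming a "place" can be encoded as a record of device, application, user, and time. `Place` and `bridged_gaps` are hypothetical names for illustration, not part of the SurfaceFleet toolkit.

```python
from dataclasses import dataclass, fields
from datetime import datetime
from typing import List


@dataclass(frozen=True)
class Place:
    """Hypothetical encoding of a 'place' along the four dimensions."""
    device: str
    application: str
    user: str
    time: datetime


def bridged_gaps(src: Place, dst: Place) -> List[str]:
    """Return the dimensions (in declaration order) that differ between places."""
    return [
        f.name
        for f in fields(Place)
        if getattr(src, f.name) != getattr(dst, f.name)
    ]


start = Place("laptop", "slides", "alice", datetime(2020, 10, 20, 9, 0))
end = Place("phone", "notes", "bob", datetime(2020, 10, 21, 14, 30))
print(bridged_gaps(start, end))  # ['device', 'application', 'user', 'time']
```

A transition whose endpoints differ in all four fields corresponds to the kind of interaction the work aims for, one that bridges every gap at once. Comparing timestamps for exact equality is a simplification; a practical notion of "the same moment" would likely be coarser.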

Watch the SurfaceFleet video on YouTube

Frederik Brudy*, David Ledo*, Michel Pahud, Nathalie Henry Riche, Christian Holz, Anand Waghmare, Hemant Surale, Marcus Peinado, Xiaokuan Zhang, Shannon Joyner, Badrish Chandramouli, Umar Farooq Minhas, Jonathan Goldstein, Bill Buxton, and Ken Hinckley. SurfaceFleet: Exploring Distributed Interactions Unbounded from Device, Application, User, and Time. In Proceedings of the 33rd Annual ACM Symposium on User Interface Software and Technology (UIST ’20). ACM, New York, NY, USA. Virtual Event, USA, October 20-23, 2020, pp. 7-21. https://doi.org/10.1145/3379337.3415874

* The first two authors contributed equally to this work.

[PDF] [30-second preview – mp4] [Full video – mp4] [Supplemental video “How to” – mp4 | Supplemental video on YouTube].

[Frederik Brudy and David Ledo’s SurfaceFleet virtual talk from UIST 2020 on YouTube]